Anonymous Airlines Training Evaluation

BACKGROUND

Program and Stakeholders

Anonymous Airlines (AA), a pseudonym, has a Customer Service Agent (CSA) training program that provides newly hired agents with the skills and knowledge necessary to execute a broad range of job responsibilities in an airport hub that connects passengers to their flights. Since its inception in the 1960s, this program has evolved into a two-week model that involves the following stakeholders:

- Upstream consumers: CSA leadership team, full-time trainers, part-time trainers

- Downstream consumers (Direct Impactees): CSA agents, gate agents

- Downstream consumers (Indirect Impactees): airport hub operations, pilots, stewardesses, ticket holders

To protect AA’s identity and ensure that its daily operations went uninterrupted, we agreed to its terms for the evaluation: anonymity and data collection outside of work hours.

EVALUATION METHODS

Evaluation Purpose and Type

The CSA program has been in operation for more than 64 years and has trained thousands of CSAs. Therefore, the evaluators used several types of evaluation to determine the effectiveness of the CSA training.

- Summative: To evaluate what occurred after the CSA program was implemented.

- Goal-Based Evaluation: To check how training aligns with CSA performance by using specific metrics to measure success.

- Goal-Free Evaluation: To be open to shifting goals and possible side effects from CSA training.

- Back-End Evaluation: To understand what happened, why it happened, and what can be improved.

Dimensions and Data Collection Methods

The evaluation process began with Brinkerhoff’s Training Impact Model, which the team used to collaborate with the client and develop a targeted dimension to evaluate. Based on the conversations and information obtained during the interview with the client, the evaluation team developed one key dimension to analyze.

- The effectiveness of training content/delivery on learning and job performance: How well is the training content designed and delivered to facilitate learning and job performance, while supporting company policy and FAA standards? (Outcome, Most Important)

The team then used Kirkpatrick’s four levels of evaluation to create questions that gathered level one (reaction) and level three (behavior) data. The level one questions focused on the trainees’ satisfaction with the program. The level three questions focused on feedback about how well CSAs completed their tasks after training.

Throughout the process, the evaluation team adhered to the International Society for Performance Improvement (ISPI) and Collaborative Institutional Training Initiative (CITI) ethical standards and FAA airline standards of conduct. To ensure unbiased responses and anonymity, the team provided anonymous surveys, and the two evaluators who were not AA employees conducted the interviews with current CSAs and CSA supervisors.

RESULTS

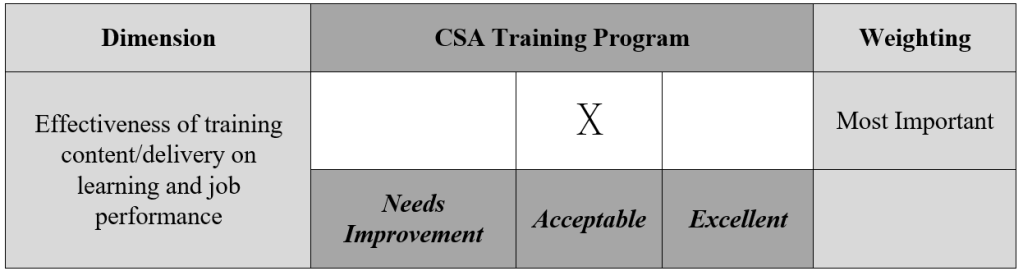

In terms of its effectiveness on learning and job performance, the training program received an overall quality rating of “Acceptable” (Table 1). Feedback was more positive than negative, with one area flagged for improvement.

Table 1: Dimensional result for training program

More Practical Training

First and foremost, the CSA agents requested more time to practice, specifically in controlling the jetbridge, an enclosed connector that links an airport terminal gate to an airplane.

When properly set up, jetbridges allow passengers to board and disembark. Improper setup can damage an airplane or make a connection unsafe.

Feedback also included recommendations for mentoring and for different ways to practice before trainees completed the training.

Conclusions

After the evaluation concluded, the team lead shared that her station leaders had asked to meet to discuss specific recommendations. The entire team took this as good news, as we had to adjust the evaluation to balance AA’s requests, the CSAs’ needs, and completion time.

The original proposal had three dimensions, but AA’s request for anonymity and the exploratory interview prompted the evaluators to consolidate them. To encourage participation, the CSA interviews were handled by phone, and the fully anonymous surveys were mobile friendly. However, anonymous surveys tend to have low completion rates, so we had to rally the local CSAs to complete them.

Although the report was received favorably, this evaluation was limited. A fuller evaluation will need more data, such as a review of the actual training and interviews with more people, specifically upstream consumers and downstream indirect impactees.

REFERENCES

Chyung, S. Y. (2019). 10-step evaluation for training and performance improvement.

Kirkpatrick, D. (1996). Evaluating training programs: The four levels. Berrett-Koehler.

American Evaluation Association Guiding Principles for Evaluators. (2008). American Journal of Evaluation, 29(3), 233–234. https://doi.org/10.1177/10982140080290030201